Jonathan Carter Blog

My first tag2upload upload

Tag2upload?

The tag2upload service has finally gone live for Debian Developers in an open beta. If you’ve never heard of tag2upload before, here is a great primer presented by Ian Jackson and prepared by Ian Jackson and Sean Whitton. In...

Debian Day South Africa 2024

Beer, cake and ISO testing amidst rugby and jazz band chaos

On Saturday, the Debian South Africa team got together in Cape Town to celebrate Debian’s 31st birthday and to perform ISO testing for the Debian 11.11 and 12.7 point...

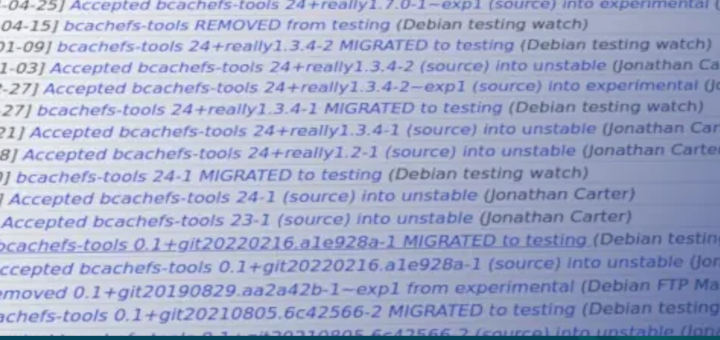

Orphaning bcachefs-tools in Debian

Around a decade ago, I was happy to learn about bcache – a Linux block cache system that implements tiered storage (like a pool of hard disks with SSDs for cache) on Linux. At that stage, ZFS on Linux was...

DebConf24 – Busan, South Korea

I’m finishing typing up this blog entry hours before my last 13-hour leg back home, after I spent 2 weeks in Busan, South Korea for DebCamp24 and DebConf24. I had a rough year and decided to take it easy...

DebConf23 – Kochi, India

I very, very nearly didn’t make it to DebConf this year: I had a bad cold/flu for a few days before I left, and after a negative COVID-19 test just minutes before my flight, I decided to take the plunge...

Debian 30th Birthday: Local Group event and Interview

Inspired by the fine Debian Local Groups all over the world, I’ve long wanted to start one in Cape Town. Unfortunately, there have been many obstacles over the years. Shiny distractions, a pandemic, DPL terms… these are just some of...

CLUG Talk: Running Debian on a 100Gbps router

Last night I attended the first local Linux User Group talk since before the pandemic (possibly even… long before the pandemic!).

Topic: How and why Atomic Access runs Debian on a 100Gbps router

Speaker: Joe Botha

This is the first...

Phone upgraded to Debian 12

A long time ago, before the pandemic, I bought a Librem 5 phone from Purism. I have also moved home since then, and sadly my phone had been sleeping peacefully in a box in the garage ever since the move. When I was...

MiniDebConf Germany 2023

This year I attended Debian Reunion Hamburg (aka MiniDebConf Germany) for the second time. My goal for this MiniDebConf was just to talk to people and make the most of the time I have there. No other specific plans or...

Upgraded this host to Debian 12 (bookworm)

I upgraded the host running my blog to Debian 12 today. My website has existed in some form since 1997; it changed from pure HTML to a Python CGI script in the early 2000s, and when blogging became big around...

Debian Reunion MiniDebConf 2022

It wouldn’t be inaccurate to say that I’ve had a lot on my plate in the last few years, and that I have a *huge* backlog of little tasks to finish. Just last week, I finally got to all my...

What are the most important improvements that Debian need to make?

“What are the most important improvements that Debian need to make?” – I decided to see what all the fuss was about and asked ChatGPT that exact question. Its response: I find that to be a great response, and I’m...

Free Software Activities for 2021-09

Here’s a bunch of uploads for September. Mostly catching up with a few things after the Bullseye release.

- 2021-09-01: Upload package bundlewrap (4.11.2-1) to Debian unstable.
- 2021-09-01: Upload package calamares (3.2.41.1-1) to Debian unstable.
- 2021-09-01: Upload package g-disk (1.0.8-2) to...

Free software activities for 2021-04

Here are some uploads for April.

- 2021-04-06: Upload package bundlewrap (4.7.1-1) to Debian unstable.
- 2021-04-06: Upload package calamares (3.2.39.2-1) to Debian experimental.
- 2021-04-06: Upload package flask-caching (1.10.1-1) to Debian unstable.
- 2021-04-06: Upload package xabacus (8.3.5-1) to Debian unstable.
- 2021-04-06: Upload...